Why are we writing tests? There are numerous reasons, but to me the primary one is that I can go into a codebase even after I have forgotten everything about it and make changes without fear of breaking 20 things at once.

One of the major antipatterns I see regularly is the dreaded testMethodWorks() testcase:

@Test

public void testCreateUser() throws Exception {

User user = userService.createUser("foo", "bar");

assertNotNull(user);

assertEquals(user.getUsername(), "foo");

assertEquals(user.getPassword(), "bar");

User invalidUser = userService.createUser("bla", "");

assertNull(user);

User someOtherTest = userService

.....

..(goes on for another 20 cases)...

}

The example is somewhat contrived, but you get the idea. A testcase that checks 30 different (marginally related) things and will potentially fail for even more reasons.

Of course that one testcase validates that the createUser() method works - and especially when a lot of setup is involved in your testcase it's convenient to just use the stuff that's already there.

But by doing so you are sacrificing a major benefit of tests: Readability through naming. If every testcase is simply named after the method it's testing, you end up with a completely useless test class that has exactly the same informative value as the class under test. Why would I bother reading the test if I could just look at the code that's doing stuff? It's probably shorter than the test case!

Imagine you come into a new codebase and whenever something breaks you first have to read through the test code. Looking at each jUnit stacktrace to figure out which assertion blew up - just so you can figure out what the test was actually doing and why that's a bad thing. Yikes.

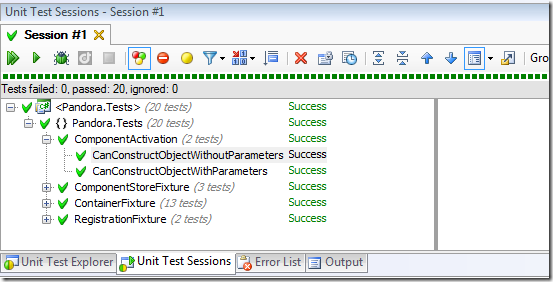

Now I won't advocate the "one assertion per test" mantra - that's going overboard and usually leads to unmaintainable tests. But at the very least group your tests not by method but by use case. If a test fails it should be for one reason and that reason damn well ought to be in the test name. Not because nobody likes to read code - but because the first thing each testrunner will report is the name of the test that failed.

It's much easier to figure out what is going on if you get a

testCreateUserWithoutAdminCredentialsReturns403ForbiddenStatusCode()

failure rather than a simple testCreateUser().

Seriously - I didn't even have to explain to you what my use case was - but if this test blows up you will immediately know it's a ACL issue and that it's manifesting itself by not returning a 403 StatusCode. If there was a second testcase called testCreateUserWithoutAdminCredentialsDoesNotInsertUserIntoDatabase you'll also not look at all the different corners of my repository why we got a record too many in some assertThat(repository.getAll().size(), equals(0)); but rather just ignore that failure as it's clearly an ACL issue not a database related thing. By splitting things into multiple testcases we also get the added benefit of predictable states. A test that did not correctly clean up some shared resource (in-memory-db etc..) will not create false positive in line 100 of your testMethodWorks() case but should be contained by your Transactional Testrunner or your setup/teardown methods.

So I propose three simple things that should always be in the testname - regardless of how the test is written or what you are testing:

- Method under test (

createUser)

- Context the test was run (

WithValidAdminCredentials)

- Expected outcome of the test (

ReturnsUserAsJson)

And you end up with createUserWIthValidAdminCredentialsReturnsUserAsJson and alongside you'd naturally get a second testcase called createUserWithValidAdminCredentialsInsertsUserIntoDatabase.

Keep that in mind and you'll make your life much easier for yourself when you have to update something in your codebase a few months down the road - once you have forgotten everything that was going through your head right now :)