Software design is hard. Not so much because good ideas are hard to come up with, but because it takes a very long time to hit the sweet spot where you really feel the design is good.

Doing this on your own is almost impossible, because you constantly have to step outside your own head and challenge the assumptions you made while writing something.

I ask myself all the time: “Is this module really in the right place? Should I break it up into smaller modules?” And frankly, I am the wrong person to answer that question, since I'm the one who made the mistake in the first place.

So I constantly force myself into the mindset of another person (a user, a tester, etc.) and try to forget what I was just thinking for a minute, so I can decide whether I’m still on track.

It’s like driving alone through unknown terrain: you have to stop all the time and pull out the map to check whether you’re still heading in the right direction.

And honestly, it sucks big time. Stopping is always bad. Dropping out of your “zone” and doing a complete context switch kills the flow. I get mentally exhausted very fast, and in the long run nothing seems to get done.

So, on my current project I decided that I couldn’t carry on alone. I had tried multiple times to arrive at a good design through lots and lots of whiteboarding, spiking and experimenting. But I never quite nailed it: I always felt about 20% away from the real thing, with a burned bridge in front of me.

So, when I called my employer last Sunday, I asked one thing: “Do I get some budget to bring in a second pair of eyes to work on this particular problem?” And the awesome answer was: “Just do it and spare me the details.”

The next day I called Harald Logar, and he agreed to stop by and go through the code with me for a day. I gave him a very brief heads-up on what I was working on (mind you, he’s a complete outsider to the project) and what problem I was trying to solve.

When he came in the following day, I explained the vision and showed him some tests I had prepared beforehand to demonstrate the “desired” behavior of the system. After that short introduction, we were already implementing like crazy.

It was amazing! Although I was doing most of the typing, Harald was constantly there to challenge my assumptions, answer my questions and throw in his own ideas when necessary.

But the real game changer for me that day was that we weren’t just good, we were faster than I had ever been in the past. We rewrote the complete data access logic of a rather complex system in less than 2 hours (complex meaning dynamic proxies, caching and some non-trivial retrieval logic).

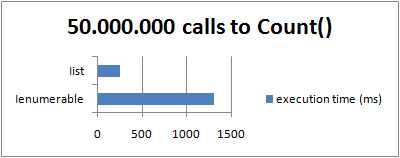

We then spent the rest of the day optimizing the system (performance is critical for this project), and I think we both learned a great deal about the inner workings of .NET collections and how they perform. We also implemented a very cool cache solution that clears the cache on a background worker thread to avoid downtime.

So, needless to say, I’d do this again any time. Harald was a joy to work with, and I think by the end of the day we were both very proud of what we had accomplished.